What Does Prompt-Based Learning Mean?

Prompt-based learning is a strategy that machine learning engineers can use to adapt a large language model (LLM) to different tasks without re-training it.

Traditional strategies for training large language models such as GPT-3 and BERT require the model to be pre-trained with unlabeled data and then fine-tuned for each specific task with labeled data. In contrast, prompt-based learning leaves the pre-trained model unchanged and steers it toward different tasks through the instructions, context, and examples supplied in the prompt.
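As a rough illustration of the contrast above, the sketch below sends three different tasks to one unchanged model by swapping only the prompt template. The template wording, the `build_prompt` and `run_task` helpers, and the `echo_model` stand-in are all hypothetical names invented for this example; a real system would call an actual LLM in place of `echo_model`.

```python
from typing import Callable

# Hypothetical prompt templates: one per task, all aimed at the same frozen model.
TEMPLATES = {
    "sentiment": "Review: {text}\nIs the sentiment of this review positive or negative?\nAnswer:",
    "translation": "Translate the following English text to French.\nEnglish: {text}\nFrench:",
    "summary": "Article: {text}\nSummarize the article in one sentence.\nSummary:",
}


def build_prompt(task: str, text: str) -> str:
    """Wrap the raw input in the template for the requested task."""
    if task not in TEMPLATES:
        raise KeyError(f"no template for task {task!r}")
    return TEMPLATES[task].format(text=text)


def run_task(model: Callable[[str], str], task: str, text: str) -> str:
    """Send the task-specific prompt to the same model; no weights are changed."""
    return model(build_prompt(task, text))


def echo_model(prompt: str) -> str:
    """Stand-in for a real LLM call (assumption): returns the prompt's last line."""
    return prompt.splitlines()[-1]


if __name__ == "__main__":
    for task in TEMPLATES:
        print(task, "->", run_task(echo_model, task, "The battery lasts all day."))
```

The point of the design is that adding a new task means adding a template, not collecting labeled data and running another fine-tuning job.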

The quality of the output generated by a prompt-based model is highly dependent on the quality of the prompt. A well-crafted prompt can help the model generate more accurate and relevant outputs, while a poorly crafted prompt can lead to incoherent or irrelevant outputs. The art of writing useful prompts is called prompt engineering.

Techopedia Explains Prompt-Based Learning

Prompt-based learning makes it more convenient for artificial intelligence (AI) engineers to use foundation models for different types of downstream uses.

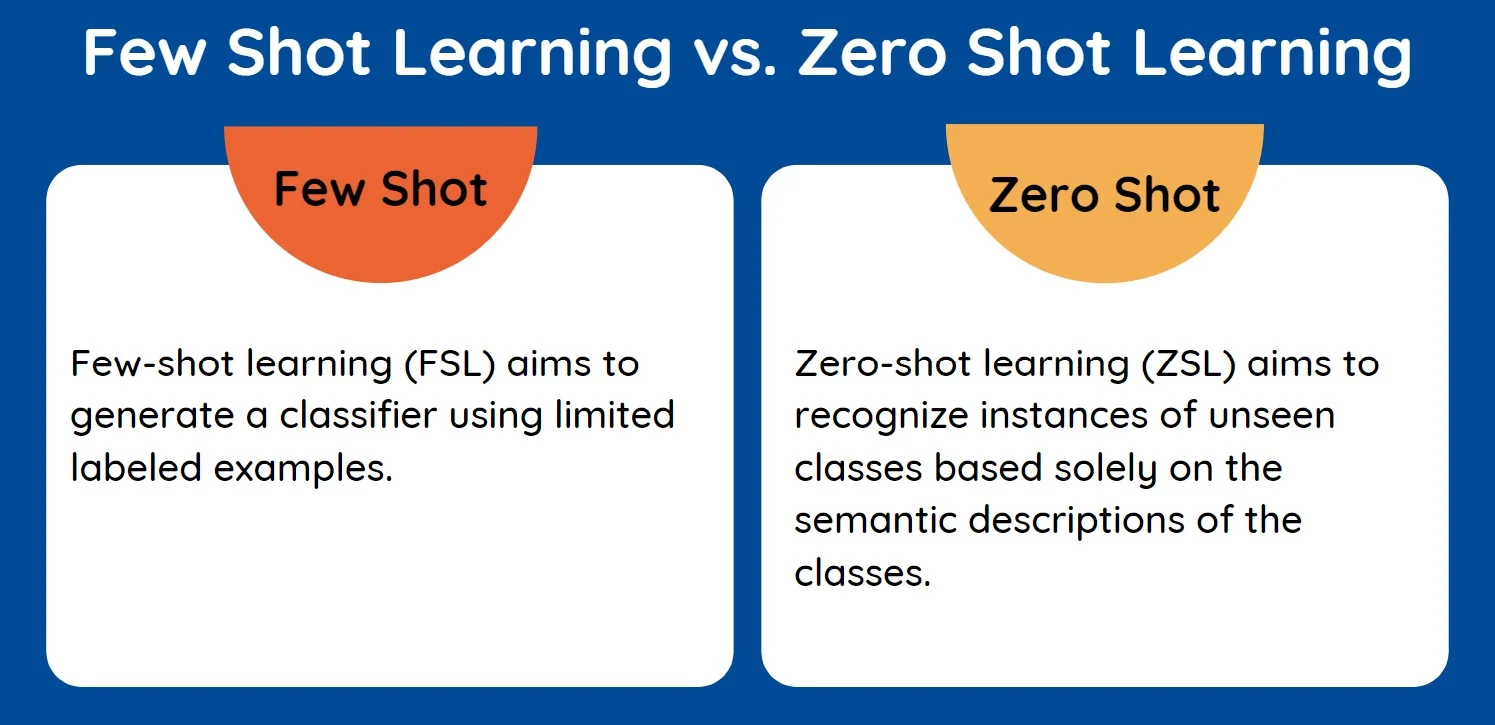

This approach to large language model optimization has led to increased interest in other types of zero-shot learning. Zero-shot learning algorithms can transfer knowledge from one task to another without additional labeled training examples.
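To make the zero-shot idea concrete, here is a minimal sketch of how a zero-shot prompt differs from one that includes a few worked examples. The `make_prompt` helper and the `Input:`/`Output:` layout are assumptions chosen for illustration, not a standard format.

```python
from typing import Sequence, Tuple


def make_prompt(instruction: str, query: str,
                examples: Sequence[Tuple[str, str]] = ()) -> str:
    """Build a zero-shot prompt (no examples) or a few-shot prompt (with examples)."""
    lines = [instruction]
    # Each labeled example is shown to the model as an input/output pair.
    for source, target in examples:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
    # The final pair is left open so the model completes the output.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


INSTRUCTION = "Classify the topic of the sentence as sports or politics."

# Zero-shot: the task is described, but no labeled examples are given.
zero_shot = make_prompt(INSTRUCTION, "The senate passed the bill.")

# Few-shot: the same task with one labeled example included in the prompt.
few_shot = make_prompt(
    INSTRUCTION,
    "The senate passed the bill.",
    examples=[("The striker scored twice.", "sports")],
)

if __name__ == "__main__":
    print(zero_shot)
    print("---")
    print(few_shot)
```

In both cases the model itself is untouched; the only difference is how much task information the prompt carries.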

Advantages and Challenges

Prompt-based training methods are expected to benefit businesses that don’t have access to large quantities of labeled data and use cases where there simply isn’t a lot of data to begin with. The challenge of using prompt-based learning is to create useful prompts that ensure the same model can be used successfully for more than one task.

Prompt engineering is often compared to the art of querying a search engine during the first days of the internet. It requires a fundamental understanding of structure and syntax — as well as a lot of trial-and-error.

ChatGPT and Prompt-Based Learning

ChatGPT uses prompts to generate accurate and relevant responses to a wide range of inputs. The model behind it is also fine-tuned over time using prompts that are relevant to a specific task, paired with human feedback on the responses.

The process involves giving the model a prompt and then allowing it to generate a response. The generated output is then evaluated by a human evaluator, and the model is adjusted based on the feedback. The fine-tuning process is repeated until the model’s outputs are acceptable.
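The loop described above (prompt, response, human evaluation, adjustment, repeat) can be sketched with a toy model. This is only an illustration of the control flow, not how ChatGPT is actually trained: `ToyModel`, its canned replies, its score list, and the `evaluate` callback standing in for a human evaluator are all assumptions made for this example.

```python
from typing import Callable, List


class ToyModel:
    """Stand-in for an LLM (assumption): picks among a few canned replies."""

    def __init__(self, replies: List[str]) -> None:
        self.replies = replies
        self.scores = [0.0] * len(replies)  # adjustable preferences, one per reply

    def generate(self, prompt: str) -> int:
        """Return the index of the currently highest-scoring reply."""
        return max(range(len(self.replies)), key=lambda i: self.scores[i])

    def adjust(self, reply_index: int, reward: float) -> None:
        """Shift the chosen reply's score according to the feedback."""
        self.scores[reply_index] += reward


def feedback_loop(model: ToyModel, prompt: str,
                  evaluate: Callable[[str, str], bool],
                  max_rounds: int = 10) -> str:
    """Generate, evaluate, and adjust until a reply is judged acceptable."""
    for _ in range(max_rounds):
        index = model.generate(prompt)
        reply = model.replies[index]
        if evaluate(prompt, reply):      # the evaluator accepts the output
            return reply
        model.adjust(index, -1.0)        # penalize the rejected output
    raise RuntimeError("no acceptable reply within max_rounds")


if __name__ == "__main__":
    model = ToyModel(["I am not sure.", "Paris is the capital of France."])
    # The lambda plays the role of the human evaluator in this sketch.
    answer = feedback_loop(model, "What is the capital of France?",
                           lambda prompt, reply: "Paris" in reply)
    print(answer)
```

A real fine-tuning pipeline would update model weights from many rated responses rather than a single score per reply, but the generate, evaluate, adjust cycle has the same shape.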